You’ve probably clicked “report” on a post at least once. Maybe it was spam. Maybe it was offensive. But have you ever wondered what happens after that?

This is where content moderation steps in. It turns a simple report into real action. It also helps keep online platforms safe.

For Employers and platform owners, moderation isn’t just about protecting users. It also protects your brand. It builds trust. It creates safe spaces where communities can grow.

Today, content is uploaded every second. That’s why moderation must be fast, accurate, and scalable.

That’s where Microworkers comes in.

What Makes Microworkers Different for Moderation Campaigns

- Human + Technology Synergy

AI tools are fast. However, they often miss context. A sarcastic joke, a cultural reference, or a borderline image can slip through automation. Microworkers adds the human touch. Real people make better decisions when machines fail. Together, people and structured microtasks create moderation that is both flexible and accurate.

- Cultural Sensitivity at Scale

What’s normal in one country may be offensive in another. A gesture, symbol, or phrase can have very different meanings. Microworkers’ global workforce ensures moderation that respects cultural differences.

- Rapid Deployment

Hiring and training internal teams takes months. Instead, Microworkers lets Employers launch campaigns right away. Tasks can go live in minutes. Results start coming in within hours. This speed is perfect for platforms that can’t afford delays.

- Customizable Workflows

Every business has its own standards. We give Employers control over how tasks are structured. From simple approve/reject tasks to multi-level reviews, campaigns can be tailored to Employer needs.

- Actionable Insights

Moderation is not only about blocking harmful content. It is also about learning from data. Employers can track common violations. They can also study user trends and improve policies. This makes moderation a strategic tool, not just a defensive one.
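
To make the idea of tracking common violations concrete, here is a minimal sketch of how an Employer might tally flagged categories from exported results. The record shape (an image ID paired with a list of category names) and the function name `violation_counts` are assumptions made for illustration, not a Microworkers export format or API.

```python
from collections import Counter


def violation_counts(records):
    """Count how often each category was flagged, most common first.

    `records` is a hypothetical export: an iterable of
    (image_id, list_of_flagged_categories) pairs.
    """
    counts = Counter()
    for _image_id, categories in records:
        counts.update(categories)
    # most_common() sorts by count, highest first
    return counts.most_common()


if __name__ == "__main__":
    sample = [
        ("img-1", ["nudity"]),
        ("img-2", ["nudity", "hate"]),
        ("img-3", []),  # nothing flagged
    ]
    for category, count in violation_counts(sample):
        print(category, count)
```

A tally like this is only a starting point. Grouping the same counts by week or by upload source would show whether a policy change is actually reducing a given type of violation.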

Who Needs Content Moderation?

Content moderation is not limited to social media platforms. In fact, many industries need it:

- E-commerce: Prevent counterfeit products, fake reviews, or offensive listings.

- Online communities & forums: Keep discussions respectful and free from harassment.

- Advertising platforms: Ensure ad creatives don’t contain misleading or harmful material.

- Gaming platforms: Filter out toxic behavior, offensive chat, or harmful content.

- Marketplaces & gig platforms: Verify user-generated content for compliance and trust.

- Education platforms: Protect learners from inappropriate images or harmful visuals.

In each of these cases, moderation directly impacts brand reputation and user trust.

Practical Use Cases for Employers

Content moderation isn’t one-size-fits-all. Different industries face unique challenges depending on the type of content their users create and share. To give Employers a clearer picture, here are several sample scenarios showing how Microworkers could support moderation efforts across different platforms and sectors.

- E-commerce Platform Cleaning Up Listings

A marketplace faces fake goods, offensive photos, and spam reviews. Microworkers helps clean listings quickly. This restores buyer trust and reduces the need for extra staff.

- Gaming Platform Fighting Toxic Behavior

A gaming platform struggles with offensive chat and harassment. Automated filters fail, but Microworkers’ workforce reviews flagged chat logs, categorizing them for moderation. The result: a cleaner, safer community that boosts player engagement.

- Social Media Startup Moderating Image Uploads

A new photo-sharing app grows rapidly but soon faces inappropriate uploads—nudity, violent scenes, and offensive gestures. With Microworkers’ ready templates, harmful content is flagged and removed within hours, keeping the platform safe and user-friendly.

- News & Media Site Managing User Photos

A news site that allows community photo submissions risks receiving graphic or offensive visuals. With Microworkers, images could be screened quickly so editors only work with safe content.

- Online Learning Platform Protecting Students

An online education platform allows students and teachers to upload images for class projects, discussion boards, and collaborative activities. Over time, some inappropriate visuals slip in—nudity, violent imagery, or offensive symbols—creating risks for the platform’s credibility and learner safety.

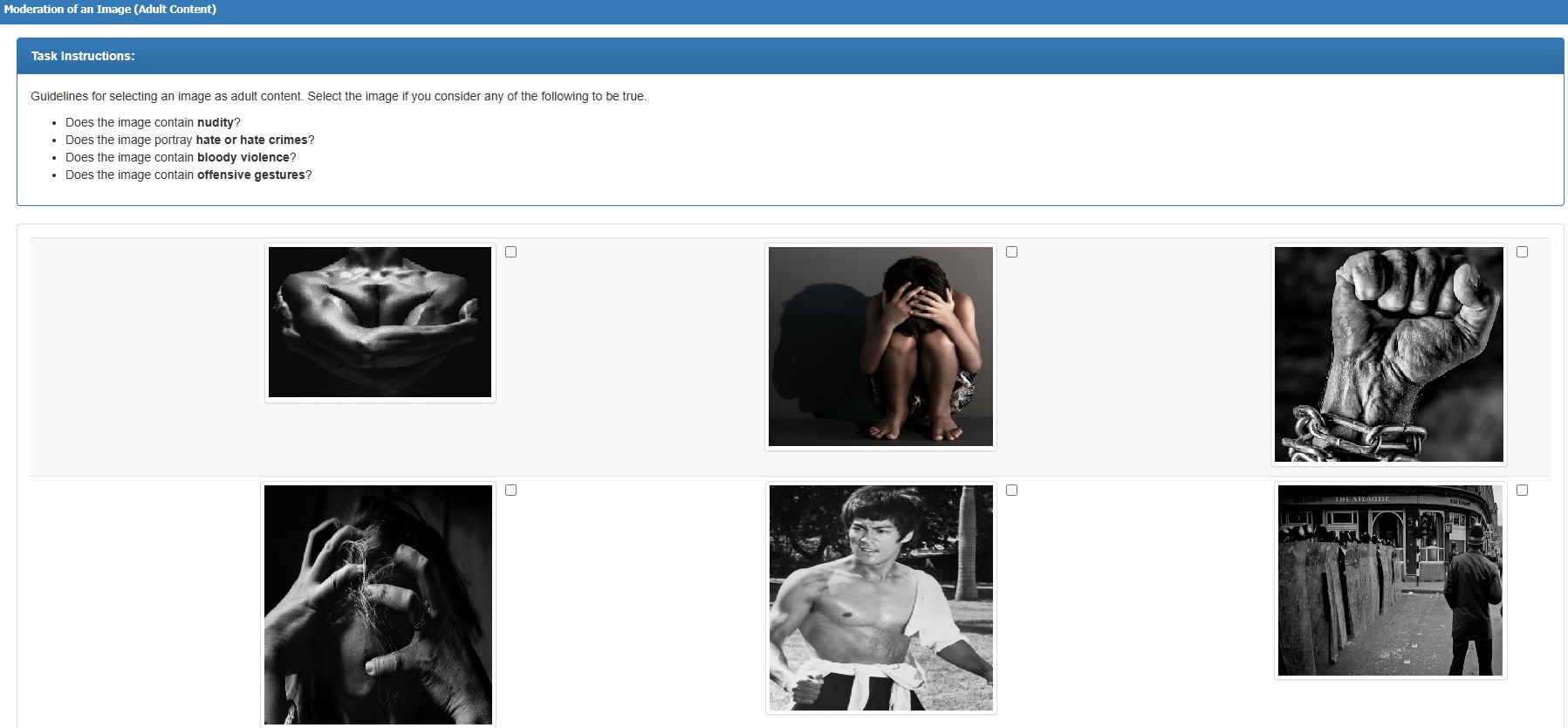

Using Microworkers’ Default Moderation Template

To make it even easier for Employers to get started, Microworkers provides a ready-to-use Adult Content Image Template. It simplifies setup while guiding workers with clear rules.

The default template guides workers to classify images based on four specific checks:

- Does the image contain nudity?

- Does the image portray hate or hate crimes?

- Does the image contain bloody violence?

- Does the image show offensive gestures?

When Employers preview the template, they will see:

📋 Task Instructions Box – A simple set of yes/no criteria that workers must follow.

🖼️ Sample Images Section – Images displayed below the instructions for workers to evaluate.

✅ Worker Selection Panel – Where workers make their choice based on the provided guidelines.

This design keeps the process straightforward: workers read the instructions, review the image, and select whether it matches any of the flagged categories.
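
For Employers who process results on their own side, the four checks can be modeled as a small data structure. The sketch below is illustrative only: the check names, the `WorkerAnswer` class, and the majority-vote rule for combining several workers' answers are all assumptions, not part of the Microworkers template or any documented platform behavior.

```python
from dataclasses import dataclass

# The four yes/no checks from the default template.
# The key names are hypothetical labels chosen for this sketch.
CHECKS = ("nudity", "hate", "bloody_violence", "offensive_gesture")


@dataclass(frozen=True)
class WorkerAnswer:
    """One worker's yes/no answers for a single image (hypothetical shape)."""
    image_id: str
    answers: dict[str, bool]  # check name -> True if the worker said "yes"


def flagged_checks(answer: WorkerAnswer) -> list[str]:
    """Return the checks this worker answered "yes" to, in template order."""
    return [c for c in CHECKS if answer.answers.get(c, False)]


def majority_flags(answers: list[WorkerAnswer]) -> list[str]:
    """Return the checks that more than half of the workers flagged.

    Majority voting is an assumed aggregation rule for this sketch.
    """
    if not answers:
        return []
    return [
        c for c in CHECKS
        if sum(a.answers.get(c, False) for a in answers) * 2 > len(answers)
    ]
```

With three workers reviewing the same image, a check is kept only when at least two of them answer "yes". A stricter platform might instead treat any single "yes" as grounds for escalation.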

👉 For Employers, this means you don’t have to create moderation criteria from scratch. You can launch campaigns immediately using the default template and customize it later as your platform’s needs evolve.

Tutorial: How to Customize the Default Template

Want to see customization in action? Check out this video walkthrough where we use Microworkers’ Smart Builder to tweak the default template.

In the tutorial, you’ll watch how to:

- Open the default template in the Smart Builder

- Add or modify classification criteria (e.g. new categories, extra checks)

- Change the wording of instructions or guidelines

- Adjust how images and workers are presented

- Test and preview the modified template before launch

This step-by-step guide makes it easy for Employers to adapt the template to their specific platform policies without needing technical expertise.

Need Something More Customized?

If our default moderation template doesn’t fully match your platform’s requirements, no need to worry! Our team can help you design a template that reflects your unique content policies. Every platform has different standards, and we’re committed to working with you to build a moderation workflow that fits your exact needs.

Our team is always here to collaborate with you in building the perfect solution. Reach out anytime and let’s build a moderation process that truly works for your platform.

Final Thoughts

Content moderation doesn’t have to be complicated or expensive. No matter your industry, be it media, e-commerce, or online learning, our platform empowers you to design moderation workflows that adapt to your unique needs.

The opportunity is here: scale your campaigns, streamline your processes, and focus on what matters most—growing your platform with confidence. Why not start today and see how Microworkers can make moderation simpler, smarter, and more effective for you? 😉
